Downloading ALL the WADS

Published on by noot

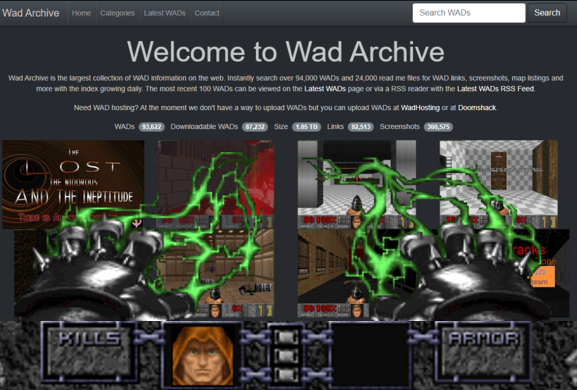

I recently landed on this interesting thread on DoomWorld that aimed to compile a bunch of old WADs. After clicking through a bunch of the pages, I somehow ended up on the WadArchive site, which is now defunct. WadArchive was a website that aimed to archive all of these Doom mods. It sucks that it's gone, but thank god the site owners backed it up to archive.org 🙏!

Here is the link to the archive of the WadArchive site on archive.org.

This was awesome. I couldn't wait to download everything, explore some of these old maps, and maybe relive a bit of my childhood.

I tried the torrent first, but it didn't include all of the files. I checked the comments, and yep, others had the same issue. So I looked at the download options and saw that I could download the files individually (the entire archive is made up of 257 splits of a zip file).

No problem… I’ll just whip up some magic to grab all of the links, and download them in parallel. So here’s how I did that.

Scraping the URLs

Scraping the URLs from the page is step one.

I followed the "download all" buttons on the archive page and eventually landed on the index page, which has every link we need. I'm not clicking 257 individual links, nor am I managing 257 downloads by hand. Let's open the developer tools (F12 in Chrome), switch to the Console tab, and use some JavaScript to gather the links.

First of all, if you didn't know this (I sure didn't), document.links returns every anchor (<a>) and <area> element on the page that has an href attribute, as an HTMLCollection.

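```javascript
// The hrefs on the index page are bare filenames, so prefix them with the directory URL
const server = 'https://archive.org/download/wadarchive/DATA/'
// Keep only the .zip links and build the full download URL for each one
const urls = Array.from(document.links).filter(a => a.getAttribute('href').endsWith('.zip')).map(a => `${server}${a.getAttribute('href')}`)
```

We get the server URL from the first part of the download link. The href values only contain the filename, which is why we need to join the two. Once that's done, we can copy the contents of the urls array to the system clipboard. Note that copy() is a helper built into the DevTools console, not standard JavaScript, so this only works from the console: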

```javascript
copy(urls)
```

Next, we paste it into vim and format it so there's only one URL per line. If you're interested, here are the vim commands that remove the quotes and commas:

```vim
:%s/\"//g
:%s/\,//g
```

I removed all of the extra whitespace that was copied over as well.
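If you'd rather skip the vim cleanup, grep can pull the URLs straight out of the pasted text. This is a minimal sketch, not what I actually ran: it assumes the clipboard contents were pasted into a file (pasted.txt below is a hypothetical name) and that every URL starts with https://, as the ones built in the console do. extract_urls is a helper name made up for this example.

```shell
# Hypothetical helper: print every https:// URL found in the pasted text,
# one per line. Quotes, commas, brackets and whitespace (including any
# Windows carriage returns) are excluded by the character class, so they
# never end up in the output.
# Usage: extract_urls pasted.txt > urls.txt
extract_urls() {
  grep -o 'https://[^",[:space:]]*' "$1"
}
```

Because the output is written fresh by grep, urls.txt also comes out with Unix line endings.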

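One more thing before saving, to spare you a headache: if you copied from a Windows host and pasted into WSL, save the file with Unix line endings. Otherwise every line keeps a trailing carriage return, which ends up glued to the end of each URL handed to wget.

```vim
:set ff=unix
```

Then save 🙂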
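The same line-ending fix can be done from the shell, which is also a good moment to sanity-check the list before starting hundreds of downloads. A rough sketch, assuming GNU sed (the default on WSL and most Linux distros; BSD sed does not understand \r): check_url_list is a hypothetical helper name, and the .zip check simply mirrors the filter used in the console snippet. The reported count should match the number of split files (257 here).

```shell
# Hypothetical helper: strip Windows carriage returns in place (same effect
# as :set ff=unix), report how many URLs are in the file, and print (and
# fail on) any line that is not an https URL ending in .zip.
# Usage: check_url_list urls.txt
check_url_list() {
  local list="$1"
  sed -i 's/\r$//' "$list"             # GNU sed: drop the trailing CR on each line
  echo "URLs: $(grep -c . "$list")"    # count non-empty lines
  # grep -v prints lines that do NOT match; any output means a bad line
  if grep -vn '^https://.*\.zip$' "$list"; then
    return 1
  fi
}
```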

Don’t stop all the Downloading!

Long story short, we're going to use wget and GNU parallel to download everything. If you don't have either, there's a good chance your distro's repositories have both. If you're running a Debian-based distro, this command will install both:

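```shell
sudo apt-get update && sudo apt-get install wget parallel
```

Now we can start the download. The -j8 flag tells parallel to run up to 8 wget jobs at once, and --line-buffer prints output a full line at a time so lines from different jobs don't get chopped up mid-line:

```shell
cat urls.txt | parallel --line-buffer -j8 wget --show-progress {}
```

Happy downloading!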
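If GNU parallel isn't packaged for your system, xargs can do a rough version of the same job. This is a sketch, not the command I used: download_all is a made-up helper name, and it assumes the URLs contain no spaces or quotes, since xargs splits its input on whitespace. The --continue flag makes wget resume partially downloaded files, which helps if you have to re-run it after an interruption.

```shell
# Hypothetical helper: run up to 8 wget processes at once, one URL each.
# --continue resumes partial files on a re-run; --no-verbose prints one
# short line per finished file instead of interleaved progress bars.
# Usage: download_all urls.txt
download_all() {
  xargs -n 1 -P 8 wget --continue --no-verbose < "$1"
}
```

Call it with download_all urls.txt. Unlike parallel's --line-buffer, xargs does nothing to keep the output of different jobs apart, which is why this version trades the progress bars for --no-verbose.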